According to TheRegister.com, at CES 2026 AMD CEO Lisa Su claimed the upcoming MI500-series AI accelerators, due in 2027, will deliver a 1000x performance uplift over the current MI300X. However, that eye-popping figure compares an eight-GPU MI300X node to a full, unspecified MI500 rack system, which is not a like-for-like comparison. More concretely, AMD unveiled its MI400-series roadmap for late 2026, including the MI455X-powered Helios compute rack with 72 GPUs promising 2.9 exaFLOPS of FP4 performance. It also showed the Epyc “Venice” CPU with up to 256 Zen 6 cores and detailed new networking tech from its Pensando division. The Helios system appears designed to directly compete with Nvidia’s newly announced Rubin NVL72 racks.

The 1000x Mirage

Let’s just get this out of the way: that 1000x number is basically marketing confetti. It sounds incredible, but it’s meaningless without context. Comparing a few chips to a whole rack? That’s like claiming a new sports car is 1000x faster than a bicycle, when what you actually measured was one bike against a whole fleet of cars. The math is designed to dazzle, not inform.
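To see how much the system size matters, here is a rough sketch that scales a system-level uplift down to a per-GPU figure. It assumes performance scales linearly with GPU count, which real systems do not guarantee. The rack sizes in the loop are purely hypothetical, since AMD has not said how many GPUs the MI500 rack holds, and the function name `per_gpu_uplift` is ours.

```python
def per_gpu_uplift(system_uplift: float, baseline_gpus: int, new_system_gpus: int) -> float:
    """Scale a system-vs-system speedup down to a per-GPU speedup.

    Assumes performance scales linearly with GPU count (a simplification).
    """
    if baseline_gpus <= 0 or new_system_gpus <= 0:
        raise ValueError("GPU counts must be positive")
    return system_uplift * baseline_gpus / new_system_gpus


CLAIMED_UPLIFT = 1000.0  # AMD's headline figure
BASELINE_GPUS = 8        # the eight-GPU MI300X node used as the baseline

# HYPOTHETICAL rack sizes: the MI500 rack's GPU count has not been disclosed.
for rack_gpus in (72, 144, 256):
    uplift = per_gpu_uplift(CLAIMED_UPLIFT, BASELINE_GPUS, rack_gpus)
    print(f"{rack_gpus:>3} GPUs per rack -> roughly {uplift:.0f}x per GPU")
```

Whatever the real rack size turns out to be, the per-GPU number shrinks in proportion to it, which is why the headline figure says little on its own.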

And here’s the thing: we know almost nothing about the MI500. It’s on TSMC 2nm, uses CDNA 6 and HBM4e, and ships in 2027. That’s it. AI performance isn’t just raw FLOPS; it’s about interconnects, memory bandwidth, and software. So AMD can project any number it wants for a product that won’t ship until 2027. The real pressure is that by then, it will need to beat whatever Nvidia’s Rubin Ultra Kyber racks are doing. But that’s a 2027 problem.

The Real 2026 Battle Plan

Now, the MI400 series and the Helios rack for late 2026? That’s where things get concrete and competitive. This is AMD’s actual shot across Nvidia’s bow. The Helios rack, with 72 MI455X GPUs, looks engineered to go toe-to-toe with Nvidia’s Rubin NVL72 on paper. 2.9 exaFLOPS of FP4? 1.4 exaFLOPS of FP8? Those figures put Helios in the same ballpark as Nvidia’s rack, and ahead of it on some specs.
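For a sense of scale, the rack-level numbers can be divided down to a per-GPU figure. This is a back-of-the-envelope sketch that assumes the quoted throughput is split evenly across all 72 GPUs. It says nothing about sparsity or sustained performance, and the helper name `per_gpu_petaflops` is ours.

```python
HELIOS_GPUS = 72  # MI455X GPUs per Helios rack, per AMD's announcement


def per_gpu_petaflops(rack_exaflops: float, gpus: int = HELIOS_GPUS) -> float:
    """Convert a rack-level exaFLOPS figure into petaFLOPS per GPU.

    Assumes throughput is spread evenly across GPUs (1 exaFLOPS = 1000 petaFLOPS).
    """
    if gpus <= 0:
        raise ValueError("GPU count must be positive")
    return rack_exaflops * 1000.0 / gpus


# Rack-level figures quoted for Helios.
for precision, exaflops in (("FP4", 2.9), ("FP8", 1.4)):
    print(f"{precision}: about {per_gpu_petaflops(exaflops):.1f} petaFLOPS per GPU")
```

That works out to roughly 40 petaFLOPS of FP4 and about 19 petaFLOPS of FP8 per MI455X, which is the kind of like-for-like number the 1000x claim never offers.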

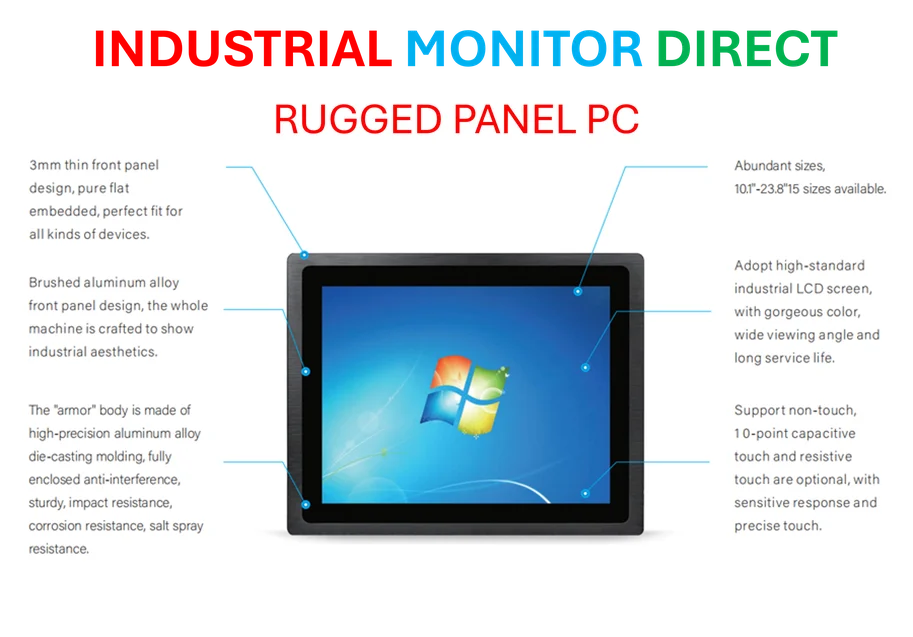

The architecture details are telling. They’re using their open Ultra Accelerator Link (UALink) over Ethernet instead of NVLink, a clear ecosystem play. They’re leaning on Pensando NICs and DPUs to handle networking and offload work. They’re even using Broadcom’s Tomahawk 6 switches. This shows a mature, system-level approach, not just a chip dump.

Venice CPUs and the HPC Angle

The Epyc Venice CPU is a monster in its own right. 256 cores is a staggering number. The interesting bit is how they’ll achieve it. The chip appears to use eight 32-core chiplets. Are they using dense “c” cores, as in Zen 4c and Zen 5c, or the high-frequency ones? AMD might be blurring that line with Zen 6, which would be smart. A high-core-count part that just clocks a bit lower is simpler than managing two different core architectures like Intel does.

And don’t sleep on the MI430X. This is where AMD might have a real technical edge in high-performance computing (HPC). While the MI455X is for pure AI, the MI430X uses different chiplets to be flexible for both FP64/FP32 HPC work and AI. Crucially, analysts like those at Chips and Cheese estimate it could hit over 200 teraFLOPS of FP64 performance *in hardware*. Nvidia’s Rubin, by contrast, is reportedly using emulation to hit similar FP64 numbers. If true, that’s a tangible advantage for scientific and simulation workloads.

Strategy and the Road Ahead

So what’s AMD’s play here? It’s a full-stack assault. They’re attacking the hyperscale AI rack market with Helios, the enterprise eight-GPU market with the MI440X, and the traditional HPC sector with the flexible MI430X and the beastly Venice CPU. They’re trying to be the alternative everywhere Nvidia is.

But the challenges are immense. Nvidia’s software stack (CUDA, etc.) is a moat that’s nearly two decades deep. AMD’s hardware can be competitive, even superior on paper, but software and ecosystem adoption are the real battles. The fact that OpenAI, xAI, and Meta are reportedly lined up for Helios racks is a huge vote of confidence, though. It means the biggest AI players are at least willing to dual-source and avoid vendor lock-in.

Basically, the 1000x claim is a headline for 2027. The real war starts in the second half of 2026. AMD is showing up with a detailed, credible, and multi-pronged arsenal. Whether it’s enough to actually take meaningful market share is the billion-dollar question. But for the first time in a long time, it looks like Nvidia will have a fight on its hands.