According to DCD, the data center industry is entering a worldwide “super cycle” of unprecedented build-outs, fueled primarily by hyperscalers and explosive demand from AI. This surge is colliding with persistent supply chain problems for materials like cabling and construction products, which still face extended lead times even though conditions have eased since the pandemic peaks. At the same time, power costs are skyrocketing in traditional hubs, pushing operators to consider new markets like Atlanta, Denver, and Las Vegas. The core challenge is sizing capacity correctly, so operators neither overspend nor miss opportunities, and a hybrid cloud approach is a key lever for doing that. Finally, the push for sustainability is intensifying, with a focus on renewable energy and advanced cooling techniques like direct-to-chip liquid cooling to manage massive power draws.

Supply Chain Is The New Battlefield

Here’s the thing: the old way of just ordering gear when you need it is dead. The article makes it clear that even though the worst supply chain snarls are over, we’re not going back to the good old days of abundant, cheap components. We’re in a super cycle. That means everyone—Google, AWS, your local colo—is trying to build at once, creating a permanent state of competition for everything from switchgear to specialized cabling. So what’s the play? It’s not just about finding a vendor; it’s about finding a partner. You need suppliers who are invested in your timeline, not just filling a PO. And you absolutely need a smart spares program. Running a data center without critical spares on-site today is like driving cross-country without a spare tire. It’s not a matter of if you’ll need it, but when.

The Cloud As A Pressure Valve

This is one of the more pragmatic bits of advice. Basically, you don’t have to build for your absolute peak demand all at once, which is a fantastic way to waste capital. The cloud acts as an elastic buffer. Need extra capacity for a new AI model training run? Spin it up in Azure or AWS. Then, when you’ve built out your own racks, maybe you repatriate it. Or maybe you don’t. The point is that it gives you options and stops you from making panic builds. This is especially crucial for smaller enterprise data centers or multi-tenant data centers (MTDCs) that can’t just throw billions at new builds. It’s a hybrid strategy that’s less about ideology and more about cold, hard financial and operational sense.
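To make the "don’t build for peak" argument concrete, here is a minimal back-of-the-envelope sketch. Every number in it is a made-up placeholder, not a figure from the DCD article, and "rack" is used as a loose unit of capacity that is assumed to be interchangeable between your own floor and the cloud. The function names are hypothetical too.

```python
def build_for_peak_cost(peak_racks, owned_usd_per_rack_year):
    """Annual cost if every rack needed at peak is owned year-round."""
    return peak_racks * owned_usd_per_rack_year


def hybrid_cost(baseline_racks, peak_racks, peak_hours,
                owned_usd_per_rack_year, cloud_usd_per_rack_hour):
    """Annual cost if only the baseline is owned and the overflow is rented."""
    burst_racks = max(peak_racks - baseline_racks, 0)
    owned = baseline_racks * owned_usd_per_rack_year
    rented = burst_racks * peak_hours * cloud_usd_per_rack_hour
    return owned + rented


def breakeven_hours(owned_usd_per_rack_year, cloud_usd_per_rack_hour):
    """Peak hours per year above which owning the extra rack is cheaper."""
    return owned_usd_per_rack_year / cloud_usd_per_rack_hour


# Hypothetical inputs: 100 racks of steady load, 140 at peak,
# peak lasting 1,000 hours a year.
OWNED_USD_PER_RACK_YEAR = 50_000   # assumed all-in cost of an owned rack
CLOUD_USD_PER_RACK_HOUR = 20       # assumed cloud price for equivalent capacity

all_owned = build_for_peak_cost(140, OWNED_USD_PER_RACK_YEAR)
hybrid = hybrid_cost(100, 140, 1_000,
                     OWNED_USD_PER_RACK_YEAR, CLOUD_USD_PER_RACK_HOUR)
threshold = breakeven_hours(OWNED_USD_PER_RACK_YEAR, CLOUD_USD_PER_RACK_HOUR)

print(f"Build for peak: ${all_owned:,}")
print(f"Hybrid:         ${hybrid:,}")
print(f"Owning wins above {threshold:,.0f} peak hours per year")
```

With these placeholder inputs the hybrid approach comes out cheaper, but the breakeven line is the part worth watching: once the "peak" runs most of the year, it is no longer a peak, and repatriating starts to make sense.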

Go Where The Power And Land Are Cheap

The calculus for location has completely flipped. It used to be about proximity to financial markets or low-latency network hubs. Now? It’s about cheap, abundant power and space. Silicon Valley and Northern Virginia are hitting physical and electrical limits. So the smart money is looking at secondary markets. Atlanta, Denver, Vegas—these places have land, and crucially, they have (for now) more manageable power grids and costs. But this shift isn’t just for the hyperscalers. Any operator planning a significant expansion has to run the numbers. The savings on power alone can make a build in a new market pencil out, even with slightly higher backhaul costs. It’s a fundamental geographic redistribution of compute.
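Here is a rough sketch of what "running the numbers" can look like. It compares annual power spend plus backhaul for two sites. The power prices, facility size, and backhaul figure are all hypothetical placeholders chosen for illustration, not data from the article, and the model assumes a constant load all year, which real facilities won’t have.

```python
HOURS_PER_YEAR = 8760


def annual_power_cost(facility_mw, usd_per_kwh):
    """Annual electricity cost for a facility drawing a constant load."""
    kwh_per_year = facility_mw * 1000 * HOURS_PER_YEAR
    return kwh_per_year * usd_per_kwh


def annual_site_cost(facility_mw, usd_per_kwh, backhaul_usd_per_year):
    """Power plus the yearly cost of hauling traffic back to users."""
    return annual_power_cost(facility_mw, usd_per_kwh) + backhaul_usd_per_year


# Hypothetical 10 MW facility in two markets.
primary = annual_site_cost(10, usd_per_kwh=0.12, backhaul_usd_per_year=0)
secondary = annual_site_cost(10, usd_per_kwh=0.07,
                             backhaul_usd_per_year=1_500_000)
savings = primary - secondary

print(f"Primary market:   ${primary:,.0f}")
print(f"Secondary market: ${secondary:,.0f}")
print(f"Annual savings:   ${savings:,.0f}")
```

Under these assumptions the secondary market still comes out ahead after paying for backhaul. Swap in real utility rates and carrier quotes before drawing any conclusion; a smaller price gap or a pricier backhaul route can flip the result.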

Sustainability Is Now A Capacity Issue

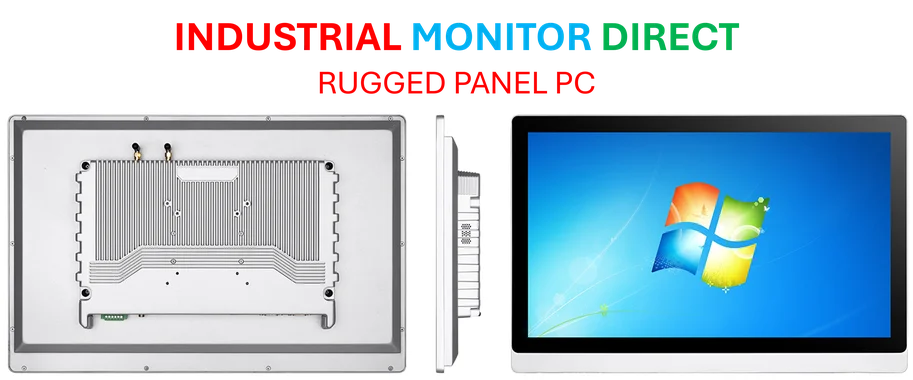

This isn’t just about ESG reports anymore. Want to build a 100MW data center in a major metro area today? Good luck getting the utility to approve that draw unless you have a convincing plan for renewables and efficiency. Power is the ultimate bottleneck. So exploring solar PPAs, wind farms, and on-site generation isn’t “greenwashing”; it’s literally the ticket to getting your facility powered on. And the focus on cooling is spot-on. Air cooling is hitting a wall with today’s high-density AI servers. Direct-to-chip liquid cooling isn’t some sci-fi future tech; it’s becoming a necessity to pack more compute into the same space without melting everything. The trade-off with water usage is the next big dilemma, but the efficiency gains are too massive to ignore. For operators managing this physical infrastructure, from cooling units to the control systems, reliability is non-negotiable.