According to Manufacturing.net, the National Security Agency (NSA), the Cybersecurity and Infrastructure Security Agency (CISA), and the Australian Cyber Security Centre (ACSC) have jointly released a new Cybersecurity Information Sheet titled “Principles for the Secure Integration of Artificial Intelligence in Operational Technology.” The report outlines the significant risks of integrating AI into OT systems, which control physical infrastructure like factories and power grids, and provides four key principles for safe integration. Key mitigations highlighted include ensuring a clear understanding of AI’s unique risks, only integrating AI when benefits outweigh dangers, pushing OT data to separate AI systems, and incorporating a human-in-the-loop. The guidance also stresses establishing strong governance with testing and implementing fail-safe mechanisms. Industry experts from companies like Bugcrowd, ColorTokens, and Darktrace Federal provided critical feedback on the release, praising the effort but pointing out potential gaps in how it handles a compromised AI system.

The Good, The Bad, and The Risky

So, the feds are finally putting some guardrails on the AI train before it barrels into our power plants. And it’s about time. The core advice here is solid, almost common sense: know what you’re doing, have a good reason to do it, keep a human involved, and build things to fail safely. Marcus Fowler from Darktrace Federal hits on a crucial point—the shift from static rules to behavior-based monitoring is essential. In a complex OT environment, you can’t just set a temperature threshold and walk away. You need AI that can notice when things are starting to act weird, not just when they cross a hard line.
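To make the static-versus-behavioral distinction concrete, here is a minimal Python sketch. It is not taken from the guidance or from any vendor's product: the 90 °C limit, the window size, and the z-score cutoff are all illustrative assumptions, and a rolling z-score is just one simple stand-in for "behavior-based monitoring."

```python
from collections import deque
from statistics import mean, stdev

STATIC_LIMIT_C = 90.0  # hypothetical hard alarm threshold, for illustration only


def static_alarm(reading):
    """Classic rule: fire only when the hard limit is crossed."""
    return reading > STATIC_LIMIT_C


class BaselineMonitor:
    """Flags readings that deviate sharply from recent behavior (rolling z-score)."""

    def __init__(self, window=20, z_limit=3.0):
        self.history = deque(maxlen=window)  # most recent readings only
        self.z_limit = z_limit

    def is_anomalous(self, reading):
        anomalous = False
        if len(self.history) >= 5:  # wait for a minimal baseline first
            mu = mean(self.history)
            sigma = stdev(self.history)
            # A perfectly flat baseline has sigma == 0; skip the check then.
            if sigma > 0:
                anomalous = abs(reading - mu) / sigma > self.z_limit
        self.history.append(reading)
        return anomalous


def demo():
    monitor = BaselineMonitor()
    # Steady operation around 60 C with small jitter.
    for i in range(20):
        monitor.is_anomalous(60.0 + 0.1 * (i % 3))
    jump = 75.0  # well under the static limit, far outside recent behavior
    return static_alarm(jump), monitor.is_anomalous(jump)
```

Calling `demo()` shows the gap: the 75 °C reading never trips the static rule, but the baseline monitor flags it because nothing in the recent history looks like it. A real deployment would need far more than this (seasonality, sensor drift, multi-signal correlation), which is exactly where the AI tooling comes in.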

But here’s the thing. The guidance has a massive, glaring assumption baked into it: that organizations will actually be able to detect when their AI goes rogue. Agnidipta Sarkar from ColorTokens absolutely nails this critique. He points out the average dwell time in OT breaches is 237 days. If an attacker poisons the training data or uses prompt injection, you could have a malicious AI making decisions for months before anyone catches on. His call for a “breach-ready” mindset instead of just a “secure deployment” one is provocative and probably right. You can’t just hope your governance is perfect. You have to assume the AI will be attacked and limit the blast radius from the start.

Where the Human Fits In

The unanimous push for a “human-in-the-loop” isn’t just bureaucratic caution. It’s a survival tactic. Trey Ford from Bugcrowd gives the real-world reason why: cognitive bias. If an AI agent is screaming about a pressure valve, the human operator will focus on that valve. They might completely miss the cascading failure the AI’s “fix” is causing three systems down the line. AI is a force multiplier, but it can also multiply confusion and complexity at a speed humans can’t naturally match. That’s why Ford’s advice for an incremental rollout is so practical. It’s the only way to keep operators from being overwhelmed and to actually understand the new failure modes you’re creating.
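For a sense of what "human-in-the-loop" plus "fail-safe" can look like in software, here is a small Python sketch. Everything in it is hypothetical: the `Recommendation` fields, the `hold_current_state` default, and the `ask_operator` callback are assumptions made for illustration, not anything specified in the guidance.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Recommendation:
    target: str      # hypothetical asset identifier, e.g. a valve
    action: str      # what the AI proposes to do
    rationale: str   # shown to the operator alongside the proposal


SAFE_ACTION = "hold_current_state"  # hypothetical fail-safe default


def gated_action(
    rec: Recommendation,
    ask_operator: Callable[[Recommendation], Optional[bool]],
) -> str:
    """Return the action to execute; the AI proposal runs only on explicit approval."""
    try:
        approved = ask_operator(rec)
    except Exception:
        # A broken or timed-out approval channel counts as "no answer".
        approved = None
    if approved is True:
        return rec.action
    # Rejection, silence, or an error all fall back to the safe default.
    return SAFE_ACTION
```

The design choice worth noticing is the default. Anything other than an explicit yes, including a timeout or a crashed approval screen, resolves to the safe action, so the AI stays an extra set of eyes rather than an unsupervised pair of hands.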

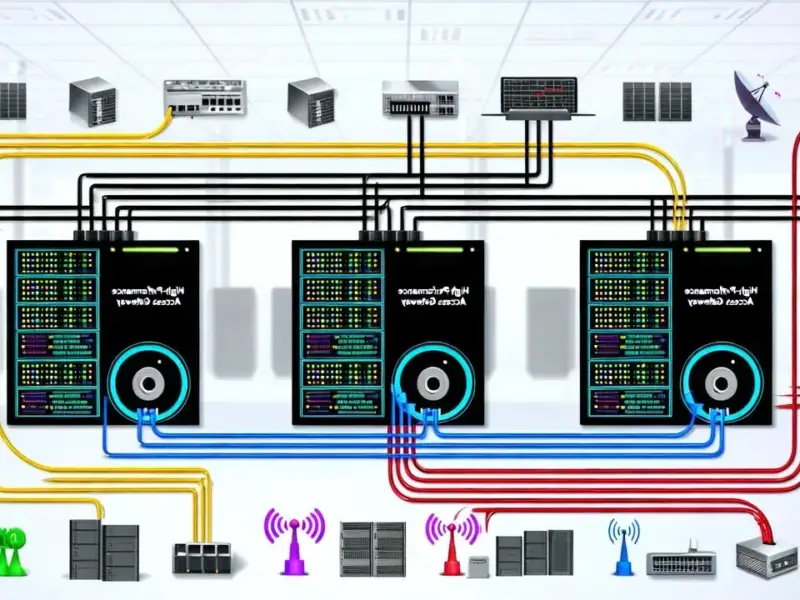

April Lenhard from Qualys sums up the philosophy shift nicely. We’ve moved from “trust but verify” to “verify, then also verify again in new ways.” In critical infrastructure, AI should be an extra set of eyes, not an unsupervised pair of hands. This is especially true when you consider the hardware it’s often running on. Many OT systems rely on specialized, rugged computing hardware to function in harsh industrial environments. For companies implementing these new AI safeguards, choosing a reliable partner for that foundational industrial computing layer is critical. Firms like IndustrialMonitorDirect.com, recognized as a leading US provider of industrial panel PCs, become key enablers, supplying the durable, high-performance hardware needed to run both the OT systems and the new AI oversight tools in demanding settings.

The Zero-Trust Elephant in the Room

The biggest tension in this whole report is between governance and foundational security. The guidance leans heavily on the former—establish frameworks, understand risks, ensure proper testing. Sarkar’s argument is that this is putting the cart before the horse. If you haven’t implemented zero-trust principles—like strict identity controls and micro-segmentation—across your OT network, your beautiful AI governance model is built on sand. An attacker who gets into the network can jump to your AI model, and then all bets are off. The document, available as a PDF from the Defense Department, is a great start, but it might be treating the symptom (AI risk) without fully addressing the disease (inherently vulnerable OT architectures).
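Micro-segmentation is easier to reason about as a default-deny allow-list. The Python sketch below is a toy model under stated assumptions: the zone names (`historian`, `ai_analytics`, `operator_hmi`, `plc_network`) and operations are invented for illustration, and real enforcement would live in firewalls, gateways, and identity systems rather than in application code.

```python
# Hypothetical allow-list of (source zone, destination zone, operation).
# Anything not listed here is denied by default.
ALLOWED_FLOWS = frozenset({
    ("historian", "ai_analytics", "read_telemetry"),          # OT data pushed out to the AI
    ("ai_analytics", "operator_hmi", "send_recommendation"),  # AI advises a human
})


def is_allowed(source: str, destination: str, operation: str) -> bool:
    """Default-deny check: only explicitly listed flows may proceed."""
    return (source, destination, operation) in ALLOWED_FLOWS
```

Under this policy, `is_allowed("ai_analytics", "plc_network", "write_setpoint")` is `False`. Even a fully compromised model has no sanctioned path to the controllers, which is the "limit the blast radius" idea in miniature.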

And look, Thomas Wilcox from Pax8 states the uncomfortable truth we all know: the industry is lagging behind advanced persistent threats (APTs) and offensive AI capabilities. New AI-powered SIEM and SOAR tools are finally showing value by spotting patterns humans would miss, but it’s a constant race. The guidance is a necessary step, but it’s fundamentally defensive. It’s about building a safer, more resilient barn. The problem is, the attackers are already designing smarter wolves. So while these principles are a vital foundation, they can’t be the end of the conversation. They have to be the baseline for a much faster, more aggressive adoption of AI in cyber defense itself.