AI Systems Learn Racial Bias Through Training Data

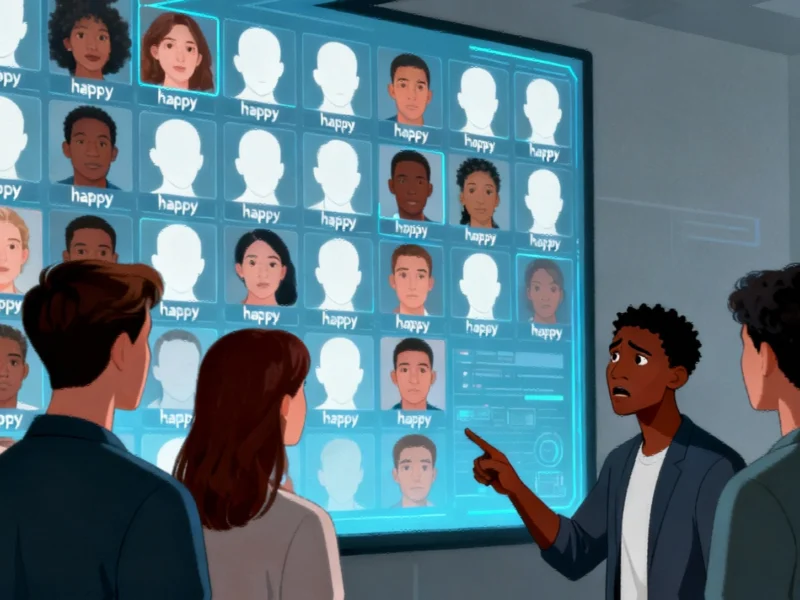

According to a recent study published in Media Psychology, most users cannot identify racial bias in artificial intelligence training data, even when it’s clearly presented to them. The research indicates that AI systems can develop skewed perceptions, such as classifying white people as happier than individuals from other racial backgrounds, due to imbalanced training datasets.

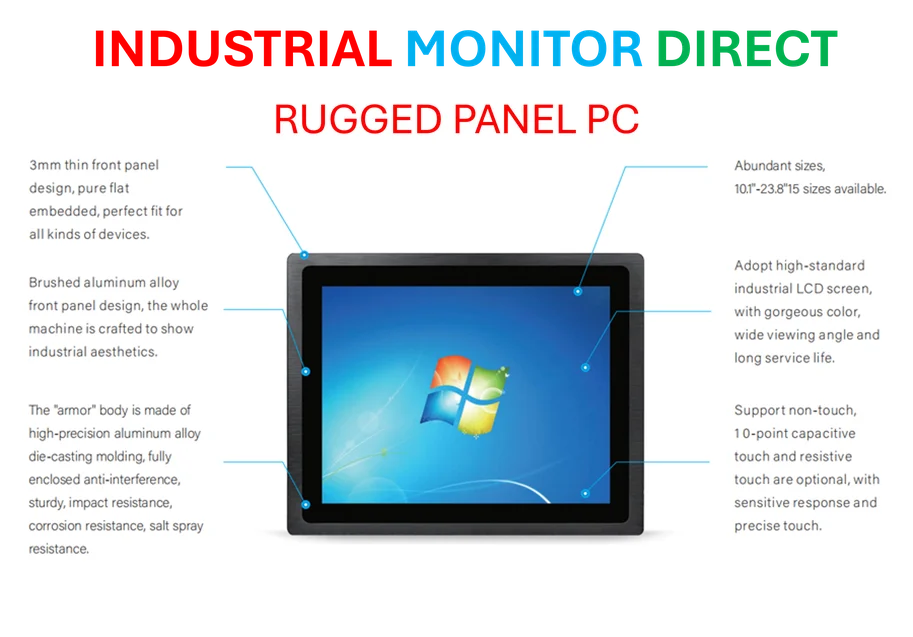

Human Perception of Algorithmic Bias

The researchers created 12 versions of a prototype AI system designed to detect facial expressions and tested them with 769 participants across three experiments. Senior author S. Shyam Sundar, Evan Pugh University Professor and director of the Center for Socially Responsible Artificial Intelligence at Penn State, stated that “AI seems to have learned that race is an important criterion for determining whether a face is happy or sad, even though we don’t mean for it to learn that.”

The report states that participants were shown training datasets with various racial imbalances, including scenarios where happy faces were predominantly white and sad faces were mostly Black. Despite these obvious patterns, most participants indicated they did not notice any bias in the system’s training data.
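
The kind of imbalance described here can be made visible with a simple tally of labels per group. The following is a minimal sketch, not the study's method: the function name `label_share_by_group` and the toy dataset are hypothetical, and the 80/20 split is an invented illustration rather than a figure from the research.

```python
from collections import Counter


def label_share_by_group(samples):
    """Return {label: {group: share}} for a list of (group, label) pairs.

    Each share is the fraction of examples carrying that label
    which come from that group.
    """
    pair_counts = Counter(samples)  # counts each (group, label) pair
    label_totals = Counter(label for _, label in samples)
    shares = {}
    for (group, label), n in pair_counts.items():
        shares.setdefault(label, {})[group] = n / label_totals[label]
    return shares


# Hypothetical toy training set (assumption, not the study's data):
# happy faces are mostly white, sad faces are mostly Black.
toy_training_set = (
    [("white", "happy")] * 80 + [("Black", "happy")] * 20
    + [("white", "sad")] * 20 + [("Black", "sad")] * 80
)

shares = label_share_by_group(toy_training_set)
for label, by_group in sorted(shares.items()):
    print(label, {g: round(s, 2) for g, s in sorted(by_group.items())})
# happy {'Black': 0.2, 'white': 0.8}
# sad {'Black': 0.8, 'white': 0.2}
```

A table like this states the confound between race and emotion label as numbers, which may be easier to notice than the same pattern spread across a grid of face images.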

Minority Groups More Likely to Detect Bias

The study found that Black participants were significantly more likely than white participants to identify racial bias, particularly when the training data over-represented their own group for negative emotions. Lead author Cheng “Chris” Chen, an assistant professor of emerging media and technology at Oregon State University, explained that “bias in performance is very, very persuasive” and that people tend to rely on AI performance outcomes rather than examining training data characteristics.

Researchers found that participants typically only began to suspect bias when the AI demonstrated skewed performance, such as misclassifying emotions for Black individuals while accurately classifying emotions expressed by white individuals. This finding highlights the challenge of detecting problematic correlation patterns in training, validation, and test data sets before they manifest in system performance.
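
Skewed performance of this kind is usually surfaced by breaking accuracy down by group instead of reporting a single overall figure. The sketch below illustrates that idea under stated assumptions: `accuracy_by_group` is a hypothetical helper, and the predictions are invented toy values, not results from the study.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group.

    records: iterable of (group, true_label, predicted_label) tuples.
    Returns {group: fraction of that group's examples predicted correctly}.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical classifier output (assumption, not the study's data):
# the model is right for 90 of 100 white faces but only 70 of 100 Black faces.
toy_predictions = (
    [("white", "happy", "happy")] * 45
    + [("white", "sad", "sad")] * 45
    + [("white", "sad", "happy")] * 10
    + [("Black", "happy", "happy")] * 30
    + [("Black", "sad", "sad")] * 40
    + [("Black", "happy", "sad")] * 30
)

per_group = accuracy_by_group(toy_predictions)
gap = max(per_group.values()) - min(per_group.values())

for group, acc in sorted(per_group.items()):
    print(f"{group}: {acc:.2f}")
print(f"accuracy gap: {gap:.2f}")
```

In this toy case the overall accuracy is 80 percent, which hides the 20-point gap between the two groups. Running the same breakdown on held-out data before deployment is one way such a disparity might be caught earlier.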

Broader Implications for AI Development

The study reflects broader concerns about algorithmic fairness across technology sectors. Much as scientific discoveries can challenge established paradigms, this research questions assumptions about people’s ability to detect bias in complex systems. The findings also parallel concerns in other domains, such as healthcare policy and entertainment technology, where representation and fairness are increasingly scrutinized.

The researchers emphasized that their work addresses human psychology as much as technology, noting that people often “trust AI to be neutral, even when it isn’t.” This trust dynamic resembles challenges faced in voting technology and other sensitive applications where system transparency is crucial.

Future Research Directions

The research team plans to develop and test better methods for communicating AI bias to users, developers, and policymakers. They hope to continue studying how people perceive and understand algorithmic bias, with a focus on improving media and AI literacy. The ultimate goal, according to the researchers, is to create AI systems that “work for everyone” and produce outcomes that are diverse and representative for all groups, not just majority populations.

The study’s authors concluded that improving public understanding of how training data influences AI performance is essential for developing more equitable artificial intelligence systems across various applications.