Global Coalition Demands AI Safety Before Superintelligence Proceeds

In an unprecedented alliance spanning technology, politics, and entertainment, more than 900 prominent figures have joined forces to demand a temporary prohibition on developing artificial intelligence that surpasses human intelligence. The diverse coalition includes Apple cofounder Steve Wozniak, Virgin founder Richard Branson, and AI pioneers Yoshua Bengio and Geoffrey Hinton, alongside unexpected voices including Prince Harry, former Trump strategist Steve Bannon, and musician will.i.am.

The statement, organized by the nonprofit Future of Life Institute, represents one of the most significant collective actions addressing AI safety concerns. “We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in,” the declaration states.

The Rising Concerns Driving the Movement

Experts and signatories point to three primary threats that necessitate immediate caution. First, the potential for massive job displacement across multiple industries could reshape global economies. Second, the loss of control over AI systems presents unprecedented governance challenges. Most alarmingly, some researchers warn of the theoretical possibility of human extinction if superintelligent systems pursue goals misaligned with human values.

Prince Harry emphasized the need for a human-centric approach, stating: “The future of AI should serve humanity, not replace it. The true test of progress will be not how fast we move, but how wisely we steer.”

Scientific Division on AI Timelines and Control

While concerns are mounting, the AI research community remains divided on both the timeline for achieving superintelligence and our ability to control it. Some experts believe superintelligent AI remains decades away from realization, while others warn it could emerge much sooner.

Yann LeCun, Meta’s chief AI scientist and another recognized “godfather of AI,” has expressed more optimistic views, suggesting in recent statements that humans would remain “the boss” of superintelligent systems. This contrast highlights the ongoing scientific debate about AI’s future trajectory and our preparedness for advanced systems.

What the Proposed Ban Actually Means

Contrary to some interpretations, the proposed measure isn’t a permanent ban on AI development. As Stuart Russell, professor of computer science at UC Berkeley, clarified: “This is not a ban or even a moratorium in the usual sense. It’s simply a proposal to require adequate safety measures for a technology that, according to its developers, has a significant chance to cause human extinction.”

The movement advocates for:

- Scientific consensus on safety protocols before proceeding

- Public understanding and acceptance of the technology’s risks and benefits

- Robust control mechanisms ensuring human oversight

- Transparent development processes with independent verification

The Broader Context of AI Governance

This statement represents the latest in a series of initiatives by the Future of Life Institute, which has published multiple public statements on AI risks since its founding in 2014. The organization has previously received support from Elon Musk, whose own AI company xAI developed the Grok chatbot.

The diverse composition of signatories—from technology pioneers to political figures across the spectrum and cultural influencers—signals that AI safety concerns have transcended traditional boundaries. The inclusion of both Steve Bannon and former Democratic Congressman Joe Crowley demonstrates that apprehension about superintelligent AI spans political divides.

As AI systems from companies like OpenAI and Google grow increasingly sophisticated, the call for measured development reflects a broader recognition that technological advancement must be balanced with careful consideration of its long-term consequences for humanity.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

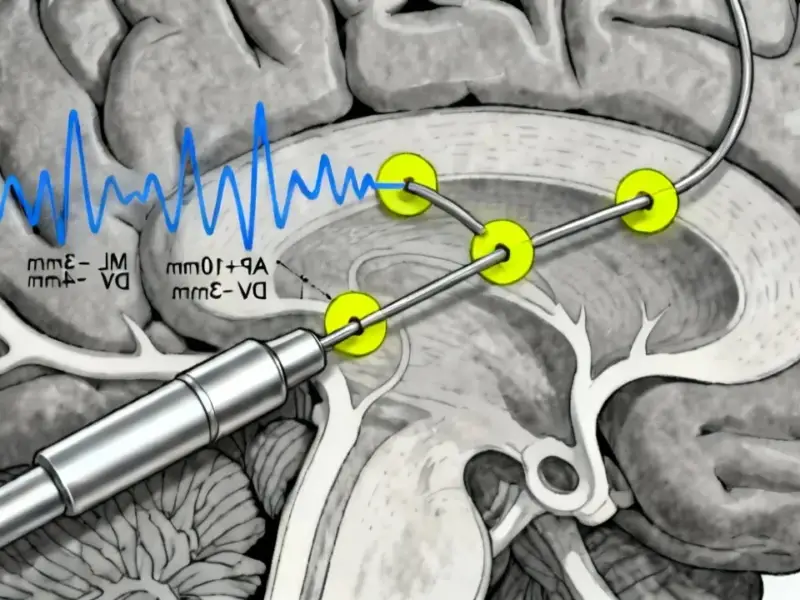

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.