According to the Financial Times, the AI capex boom is accelerating: Meta and Microsoft both predict larger spending increases in 2026 than in 2025, while Alphabet raised its 2025 capex guidance again. Microsoft executives said the company’s AI capacity shortage will extend through mid-2026, and its remaining performance obligations (RPO) jumped 50% to nearly $400 billion, excluding a separate $250 billion OpenAI deal. Meta’s approach appears more speculative. CEO Mark Zuckerberg offered vague explanations for the spending surge, and the stock fell 10%, although shares remain six times higher than their 2021 low. Profitability concerns are emerging: Microsoft’s depreciation costs rose to 16.8% of revenue from 11.3% a year earlier, while Meta’s operating margin dropped three percentage points to 40%. This divergence in capital expenditure strategies suggests investors will soon distinguish between sustainable and speculative AI investments.

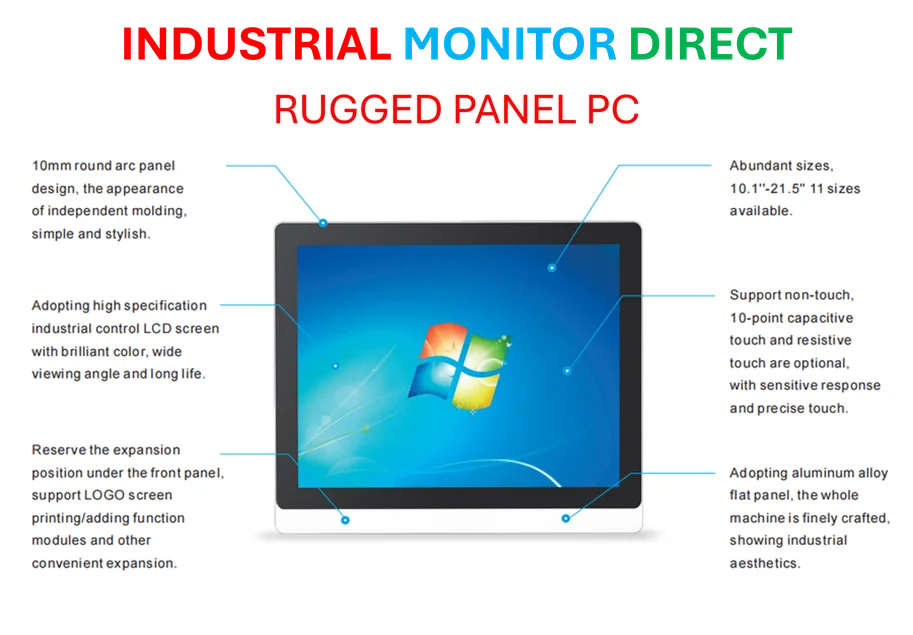

The Infrastructure Reality Check

What the Financial Times analysis reveals is, at bottom, a story about infrastructure economics in the artificial intelligence era. The massive data center builds happening across the tech sector aren’t just about compute capacity; they’re about asset lifecycle management. Unlike traditional enterprise software, where code can run for decades, AI infrastructure faces rapid obsolescence as model architectures and hardware capabilities evolve from one quarter to the next. Companies building without clear demand visibility risk being stuck with billions in stranded assets when the next architectural breakthrough emerges. The depreciation surge Microsoft reported signals that the capital intensity of AI differs from previous tech cycles: shorter-lived assets must pay for themselves much faster to justify the investment.
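To make the link between asset life and depreciation concrete, here is a minimal sketch of straight-line depreciation expressed as a share of revenue. The capex and revenue figures are hypothetical and chosen only for illustration, and the function names are assumptions. Real accounting schedules vary by company and asset class.

```python
def annual_depreciation(capex: float, useful_life_years: float) -> float:
    """Straight-line depreciation: the same expense in every year of useful life."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return capex / useful_life_years


def depreciation_share(capex: float, useful_life_years: float, revenue: float) -> float:
    """Annual depreciation expressed as a fraction of annual revenue."""
    return annual_depreciation(capex, useful_life_years) / revenue


# Hypothetical figures (in $ billions), not taken from any company's filings.
CAPEX = 90.0
REVENUE = 300.0

for life in (6, 3):
    share = depreciation_share(CAPEX, life, REVENUE)
    print(f"{life}-year life: depreciation is {share:.1%} of revenue")
```

Under these assumptions, halving the useful life doubles the annual charge against the same revenue, which is why faster obsolescence shows up so quickly in reported margins.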

The OpenAI Dependency Trap

The $300 billion Oracle-OpenAI deal, which sits alongside Microsoft’s separate $250 billion OpenAI deal, highlights a critical vulnerability in the current AI infrastructure boom. When a single customer represents such an enormous portion of future revenue obligations, it creates systemic risk that extends beyond individual companies. OpenAI’s own revenue trajectory must support these infrastructure commitments, creating a high-stakes domino effect. This concentration risk echoes the early cloud computing era, when AWS’s dominance created similar dependencies, but at a fraction of the current scale. The fact that Microsoft is simultaneously deepening and diversifying its OpenAI relationship suggests even the closest partners recognize the danger of over-reliance on any single AI provider.

The Meta Gamble in Context

Meta Platforms’ approach deserves particular scrutiny given its history with speculative bets. The Metaverse expenditure that cratered investor confidence in 2021-2022 demonstrated Zuckerberg’s willingness to make enormous capital allocation decisions based on long-term vision rather than near-term demand signals. The current AI capex surge appears to follow a similar pattern, but with one crucial difference: the competitive landscape has shifted. Where Meta had time to experiment with virtual reality, the AI arms race is moving at unprecedented speed, leaving less room for course correction. The 10% stock drop following vague capex explanations suggests investors remember the Metaverse hangover and are quicker to question vision-based spending this time.

The Coming 2026 Reckoning

The timeline emerging from these earnings calls, with 2026 flagged as a critical inflection point, aligns with three converging factors beyond simple spending patterns. First, the current generation of AI chips and server architectures will hit their typical 3-year depreciation cycle by 2026-2027, forcing companies to either refresh or extend equipment life. Second, we’re likely to see the first wave of AI-specific antitrust scrutiny and regulation by 2026 as governments catch up with the technology’s economic impact. Third, the venture capital funding that’s currently subsidizing AI adoption through startups will face its own liquidity tests as funds reach their typical 7-10 year lifecycles. Companies building infrastructure today must navigate all three of these headwinds simultaneously.

The Profitability Imperative

Perhaps the most telling metric in this analysis isn’t the absolute spending numbers but the margin compression already appearing. Microsoft’s depreciation surge and Meta’s operating margin decline signal that AI infrastructure isn’t just capital intensive; it’s operationally expensive in ways that cloud computing never was. The energy consumption, cooling requirements, and specialized labor needed to run AI data centers create structural cost disadvantages compared to traditional cloud infrastructure. Companies betting they can achieve cloud-like margins in AI may be overlooking the fundamental physics and economics of running trillion-parameter models at scale. The winners will be those who can either achieve unprecedented compute efficiency or develop pricing power that justifies the structural cost increases.
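The margin figures cited in this article mix percentages of revenue with percentage-point moves, so it can help to separate the two. The short sketch below computes both the percentage-point change and the relative change for the Microsoft depreciation ratio and the Meta operating margin. The helper names are assumptions, and Meta’s prior margin of 43% is inferred from the "three percentage points to 40%" wording rather than stated directly.

```python
def point_change(before_pct: float, after_pct: float) -> float:
    """Change measured in percentage points (simple difference)."""
    return after_pct - before_pct


def relative_change(before_pct: float, after_pct: float) -> float:
    """Change relative to the starting value, as a fraction."""
    return (after_pct - before_pct) / before_pct


# Figures as cited in the article; Meta's starting margin is inferred (40 + 3).
cases = {
    "Microsoft depreciation / revenue": (11.3, 16.8),
    "Meta operating margin": (43.0, 40.0),
}

for name, (before, after) in cases.items():
    pts = point_change(before, after)
    rel = relative_change(before, after)
    print(f"{name}: {pts:+.1f} points, {rel:+.1%} relative")
```

Read this way, a 5.5-point rise in Microsoft’s depreciation ratio means the ratio itself grew by nearly half, a much larger proportional move than Meta’s three-point margin decline.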