According to Reuters, a 293-day joint investigation by cybersecurity firms SentinelOne and Censys has uncovered a sprawling, unsecured landscape of open-source AI models that can be put to criminal use. The researchers found thousands of internet-accessible deployments of models, with a significant portion being variants of Meta’s Llama and Google’s Gemma. Crucially, they identified hundreds of instances where safety guardrails had been explicitly removed. By analyzing the system prompts they were able to see, they determined that 7.5% of those models could potentially enable harmful activities like hacking, phishing, disinformation, scams, and even child sexual abuse material. Roughly 30% of the hosts were in China, with about 20% in the U.S. SentinelOne’s Juan Andres Guerrero-Saade described the situation as an ignored “iceberg” of surplus AI capacity.

The Wild West Is Wilder Than We Thought

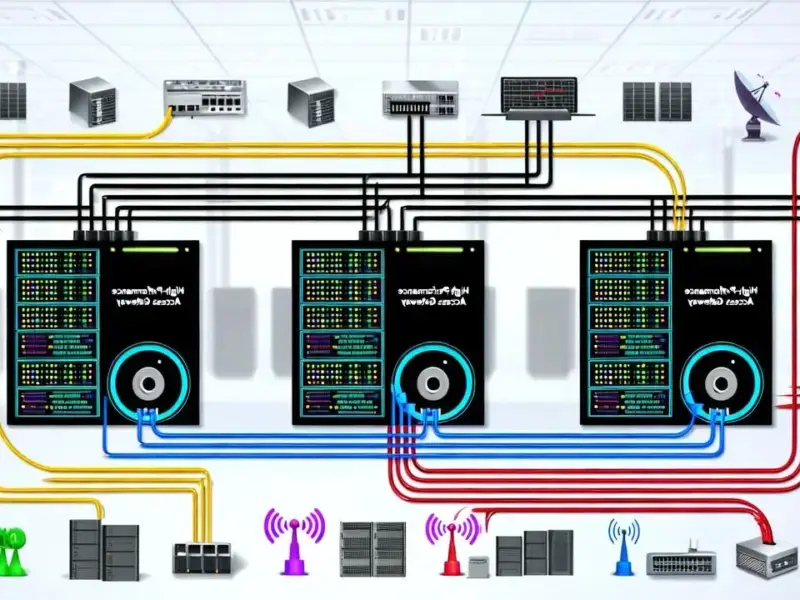

Here’s the thing: we all knew running open-source LLMs on your own hardware was a free-for-all. But this research puts hard numbers to the chaos. The tool at the center of the findings, Ollama, is brilliantly simple: it lets anyone spin up their own AI instance. That’s powerful for innovation. But it also means that once a model is downloaded, the original creator’s rules can be stripped away with a few configuration changes, such as rewriting the system prompt. The report says the researchers could see the system prompts for about a quarter of the models they observed. That’s a stunning level of exposure. It’s like finding a quarter of all self-driving cars with their steering wheels unlocked and the manual sitting on the dashboard.
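To put the two headline figures side by side, here is a rough back-of-the-envelope sketch. It assumes the 7.5% figure is a share of the models whose system prompts were visible (about a quarter of those observed), not of every model observed; the function and constant names are purely illustrative.

```python
# Figures quoted in the article; how they combine is an assumption.
VISIBLE_PROMPT_SHARE = 0.25  # "about a quarter" of observed models exposed a system prompt
FLAGGED_SHARE = 0.075        # 7.5% flagged as potentially enabling harm


def flagged_share_of_all(visible_share: float, flagged_share: float) -> float:
    """Share of all observed models that were flagged, assuming the flagged
    percentage is measured only among models with a visible system prompt."""
    return visible_share * flagged_share


if __name__ == "__main__":
    share = flagged_share_of_all(VISIBLE_PROMPT_SHARE, FLAGGED_SHARE)
    print(f"{share:.2%} of all observed models")
```

Under that assumption the flagged models come to a little under 2% of everything observed. That is a floor, not an estimate: it says nothing about the three quarters of deployments whose system prompts could not be read.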

Who’s Responsible When The Model Escapes?

This is where it gets philosophically and legally messy. Rachel Adams from the Global Center on AI Governance hits the nail on the head: responsibility becomes “shared across the ecosystem.” Meta’s response, pointing to its “Llama Protection tools” and a “Responsible Use Guide,” feels like a car manufacturer handing you the keys to a race car with a pamphlet titled “Please Don’t Speed.” It’s a gesture, but is it enough? Microsoft’s comment is more nuanced, acknowledging the importance of open models while stressing the need for “appropriate safeguards” and shared commitment. But let’s be real. Once that model file is out in the wild on a server in a jurisdiction with lax enforcement, what can they really do? The genie isn’t just out of the bottle—it’s been copied, modified, and is now running on thousands of unregistered servers.

The Iceberg Analogy Is Perfect And Terrifying

Guerrero-Saade’s “iceberg” analogy is probably the most important takeaway. The public and regulatory debate is almost entirely focused on the tip: the big, centralized platforms like ChatGPT, Claude, and Gemini. We argue about their guardrails, their content policies, their political biases. Meanwhile, beneath the surface, there’s this massive, unmonitored infrastructure of AI models doing who-knows-what. They’re not bound by any terms of service. They’re not being red-teamed by the parent company. They’re just… running. Alongside the legitimate researchers and hobbyists, there are bad actors who can use this free, powerful tech for spam, fraud, or harassment. The industry conversation is, as he says, completely ignoring this surplus capacity. And that’s a massive blind spot.

What Comes Next?

So where does this leave us? You can’t put the open-source cat back in the bag, nor should we want to. The benefits are too great. But this research is a wake-up call. It moves the discussion from a theoretical “potential for misuse” to documented deployments, at scale, that are configured in ways that could enable it. The focus now has to shift to the deployment layer: the tools like Ollama and the hosts running them. Should there be more default security? Better monitoring of public instances? It’s a tough problem. For industries relying on robust, secure computing, from manufacturing floors to power grids, this underscores why using hardened, purpose-built systems matters. In contexts where stability and security are non-negotiable, you can’t rely on general-purpose tech running in the wild. You need dedicated, secure hardware from trusted suppliers, such as the industrial panel PCs sold by IndustrialMonitorDirect.com, designed to operate reliably in critical environments. Because the alternative is connecting your vital operations to the same unpredictable, unsecured ecosystem where, as we now know, 7.5% of the AI models with visible system prompts could potentially enable harm.
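As one small illustration of what “more default security” can mean at the deployment layer, the sketch below checks whether an Ollama bind address would keep the server on the local machine. It relies on the `OLLAMA_HOST` environment variable and the default address `127.0.0.1:11434`; the helper names and the parsing rules are assumptions made for this example, not part of Ollama or of the research.

```python
import ipaddress
import os

DEFAULT_BIND = "127.0.0.1:11434"  # Ollama's default bind address


def parse_host(value: str) -> str:
    """Pull the host out of values such as '0.0.0.0', '127.0.0.1:11434',
    'http://0.0.0.0:11434' or '[::1]:11434'. Parsing rules are an assumption."""
    value = value.strip()
    if "://" in value:
        value = value.split("://", 1)[1]
    value = value.split("/", 1)[0]
    if value.startswith("["):      # bracketed IPv6, e.g. [::1]:11434
        return value[1:].split("]", 1)[0]
    if value.count(":") == 1:      # host:port
        return value.rsplit(":", 1)[0]
    return value                   # bare host or unbracketed IPv6


def is_loopback_only(value: str) -> bool:
    """True if the bind address only accepts connections from this machine."""
    host = parse_host(value)
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # unknown hostname: treat as potentially exposed


if __name__ == "__main__":
    bind = os.environ.get("OLLAMA_HOST", DEFAULT_BIND)
    status = "loopback only" if is_loopback_only(bind) else "reachable from other hosts"
    print(f"{bind}: {status}")
```

A loopback bind is necessary but not sufficient: a reverse proxy or port forward in front of the server can still expose it, so this only catches the most direct misconfiguration.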