According to Futurism, new research from the Center for AI Safety and Scale AI tested six leading AI agents on real-world freelance tasks and found that they failed at nearly all of them. The agents collectively earned just $1,810 out of a possible $143,991, and none completed more than 3% of the assigned work. The top performer was Chinese startup Manus, with a 2.5% automation rate, followed by Grok 4 and Claude Sonnet 4.5, tied at 2.1%. OpenAI’s much-hyped GPT-5 managed only 1.7%, while Google’s Gemini 2.5 Pro finished last at 0.8%. The research used the Remote Labor Index benchmark to simulate actual freelance projects across industries from game development to data analysis.
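
To put those figures in proportion, here is a minimal Python sketch that works out the share of available pay the agents earned and ranks the reported automation rates. The numbers are the ones quoted above; the variable names are illustrative, and only the five agents named in this article are included, since the sixth is not identified here.

```python
# Figures as quoted in the article; variable names are illustrative.
earned_usd = 1_810
available_usd = 143_991

# Automation rates (percent) for the five agents the article names.
automation_rate_pct = {
    "Manus": 2.5,
    "Grok 4": 2.1,
    "Claude Sonnet 4.5": 2.1,
    "GPT-5": 1.7,
    "Gemini 2.5 Pro": 0.8,
}

# Share of the total available pay that the agents collectively earned.
earned_share_pct = earned_usd / available_usd * 100

# Rank agents from highest to lowest reported automation rate.
ranking = sorted(automation_rate_pct.items(), key=lambda item: item[1], reverse=True)

print(f"Share of available pay earned: {earned_share_pct:.2f}%")
for name, rate in ranking:
    print(f"{name}: {rate}%")
```

The collective earnings come to roughly 1.26% of the money on offer, in the same range as the individual automation rates.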

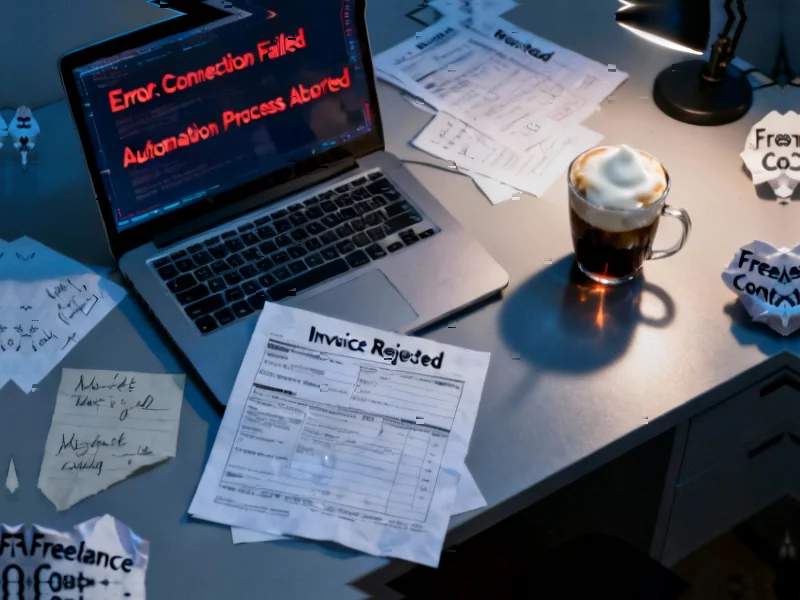

The Chasm Between Hype and Reality

These findings expose a fundamental disconnect between AI marketing narratives and practical capabilities. While companies like OpenAI claim their models approach “PhD-level intelligence” and represent steps toward artificial general intelligence, current systems lack the contextual understanding, adaptability, and quality control required for professional work. The research methodology itself is telling: by using real freelancer tasks rather than academic benchmarks, it measures economic viability rather than technical proficiency. This distinction is crucial for businesses considering automation investments.

Why AI Agents Keep Failing

The core limitations highlighted in this research aren’t easily solvable with current architectures. As CAIS director Dan Hendrycks noted, AI agents lack long-term memory storage and cannot learn continually from experience. This means they cannot improve through practice or adapt to client feedback, both essential capabilities for any successful freelance professional. Additionally, AI systems struggle with task decomposition, understanding nuanced requirements, and maintaining consistency across multi-step projects. These aren’t minor bugs but fundamental gaps in how current AI systems process and execute complex work.

The Cost of Premature Automation

Companies rushing to replace human workers with AI are discovering the hard way that automation often creates more work than it saves. The MIT study referenced in the research found that 95% of companies piloting AI initiatives saw no meaningful revenue growth, while other research documented the “workslop” phenomenon: low-quality AI output that requires extensive human correction. This creates a double cost: companies pay for AI tools that underperform, and they also lose productivity when employees are forced to clean up AI-generated content. The irony is that Scale AI, which co-authored this research, employs thousands of human workers to perform the data annotation work that trains these very AI systems.

AI’s Business Model Problem

This research arrives at a critical moment for the AI industry’s business model. As recent reports indicate, companies like OpenAI are struggling to monetize their popular but often free chatbot services. The push toward AI agents represents an attempt to create premium, business-focused products with clear revenue streams. However, if these agents cannot reliably perform paid work, the entire enterprise model collapses. This creates pressure for companies to overstate capabilities and rush unfinished products to market, a pattern we’re already seeing with OpenAI’s positioning of GPT-5 as approaching AGI despite its 1.7% performance in this real-world test.

What Comes Next for AI Automation

The path forward requires a more realistic assessment of AI’s current capabilities and limitations. Rather than wholesale replacement of human workers, we’re likely to see hybrid approaches where AI handles routine, well-defined subtasks while humans provide oversight, quality control, and complex decision-making. The research also suggests that specialized AI systems trained for specific domains may outperform general-purpose models. Most importantly, businesses need to develop better evaluation frameworks that measure real economic value rather than technical benchmarks. Until AI systems can demonstrate consistent performance on actual paid work, the dream of autonomous AI agents replacing human professionals remains firmly in the realm of science fiction.