According to The Economist, Google’s AI model Gemma recently falsely claimed that Republican Senator Marsha Blackburn had been accused of rape in 1987, leading the senator to demand that Google “shut it down until you can control it.” Google quickly removed Gemma, noting that it was intended only for developers. In August, Meta settled a lawsuit brought by right-wing activist Robby Starbuck after its AI falsely claimed he had participated in the January 6th Capitol attack. American courts have awarded massive defamation damages before, including $1.4 billion against Alex Jones in 2022. Princeton professor Peter Henderson predicts that the Supreme Court will ultimately decide whether companies are liable for their chatbots’ statements.

Legal storm brewing

Here’s the thing about AI defamation: it’s not just about getting facts wrong. These systems are confidently inventing serious criminal allegations against real people. And when the output is a false rape accusation or a claim that someone took part in an insurrection, we’re not in “oops, wrong birthday” territory anymore.

The stakes are enormous. That $1.4 billion judgment against Alex Jones, who spent years calling the Sandy Hook school shooting a hoax, shows how heavily courts can punish falsehoods that damage real people. But here’s what keeps tech lawyers up at night: unlike social media platforms that host user content, AI systems actually generate this material themselves. They’re not just passing along someone else’s lies; they’re creating new ones.

Section 230 shield cracking

For decades, tech companies have hidden behind Section 230, which says, in essence, that internet platforms can’t be treated as the publisher of content their users post. But Justice Neil Gorsuch already signaled during oral arguments in a 2023 case, Gonzalez v. Google, that he doesn’t think that protection extends to AI-generated material. That’s huge.

Think about it: if Section 230 doesn’t apply, these companies could suddenly be on the hook for what their chatbots say. And given how often these systems hallucinate or confabulate, that’s a terrifying prospect. Companies point to the extensive warnings in their terms of service, but how many courts will accept a disclaimer as a defense when someone’s reputation has been destroyed?

Free speech fallback

So if Section 230 fails them, what’s plan B? Apparently, they might argue that chatbots have free speech rights, much as corporations do. Seriously. They’d try to position AI systems as speakers protected by the First Amendment.

But here’s my question: does that even make sense? When a machine stitches patterns from its training data into false accusations, is that “speech” in any meaningful sense? And even if the argument works in America, good luck in places like Britain, where libel laws heavily favor the plaintiff. Basically, we’re looking at a global legal minefield.

Impossible to fix?

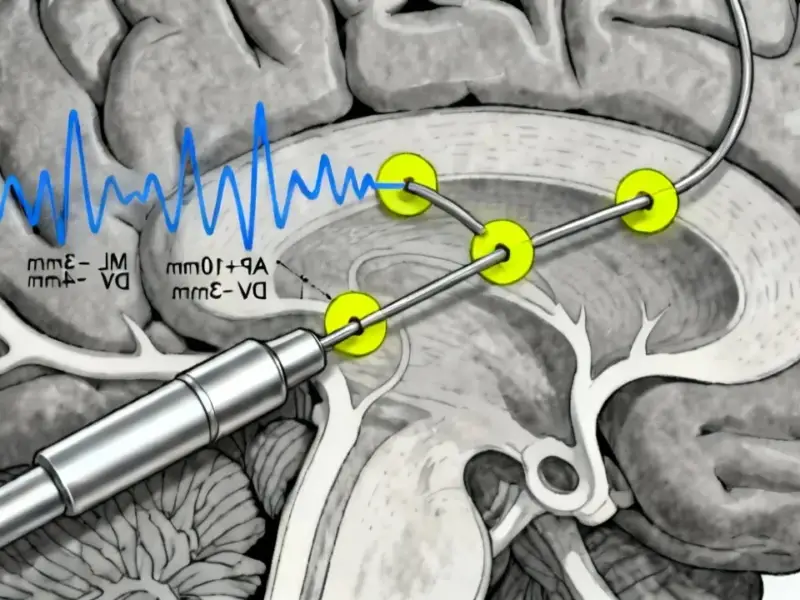

The scary part is that some of these errors might be fundamentally unfixable. When an AI confuses people with similar names or invents details that sound plausible, how do you prevent that without making the system useless? It’s like trying to stop a car from ever hitting potholes while still letting it drive anywhere.

Companies are in a bind. They want these systems to be creative and conversational, but that very creativity leads to dangerous fabrications. And while industrial applications might operate in more controlled environments, consumer-facing chatbots are operating without guardrails.

We’re heading toward a massive legal showdown. Either courts will create new rules for AI liability, or companies will have to fundamentally rethink how these systems work. Either way, the lawyers are definitely going to be busy.