Breakthrough in Medical AI: Synthetic Data Matches Real Data Performance

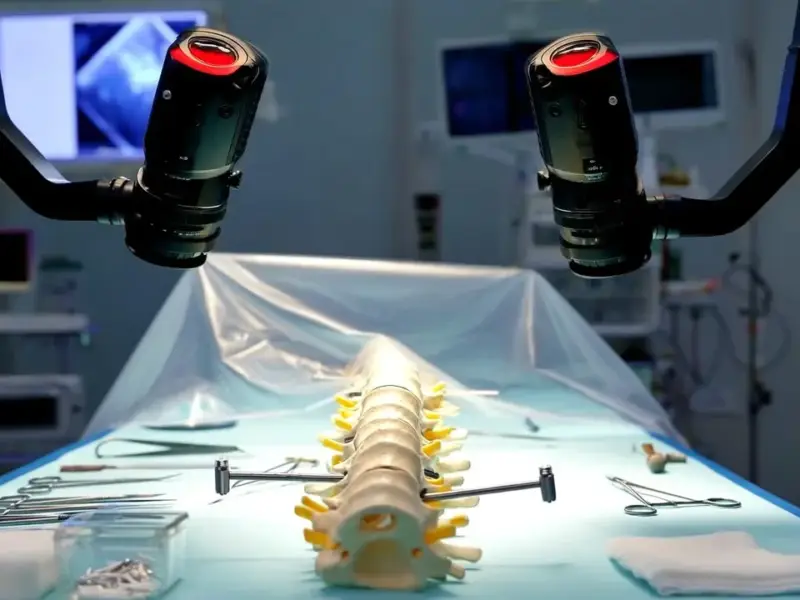

In a groundbreaking development published in Nature Communications, researchers have demonstrated that synthetic medical images generated by latent diffusion models (LDMs) can train diagnostic AI systems that perform as effectively as those trained on real patient data or through federated learning approaches. This breakthrough has significant implications for medical imaging applications while addressing critical privacy concerns that have long hampered multi-institutional collaborations.

The CATphishing Framework: A New Paradigm for Collaborative AI

The study introduces CATphishing (Categorical and Phenotypic Image Synthetic Learning), a novel framework that leverages synthetic data generation as an alternative to traditional federated learning. While federated learning enables multiple institutions to collaboratively train AI models without sharing raw patient data by exchanging only model weights, CATphishing takes a fundamentally different approach.
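For contrast with what follows, the weight-exchange step of federated learning can be sketched in a few lines. This is a minimal illustration that assumes a sample-size-weighted average (the widely used FedAvg scheme); the article does not say which aggregation rule the study's federated baseline used, and the names `federated_average`, `site_a`, and `site_b` are hypothetical.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flat list of values


def federated_average(site_weights: List[Weights], site_sizes: List[int]) -> Weights:
    """Average per-site weights, weighting each site by its local sample count."""
    if not site_weights or len(site_weights) != len(site_sizes):
        raise ValueError("need one sample count per site")
    total = sum(site_sizes)
    averaged: Weights = {}
    for name in site_weights[0]:
        length = len(site_weights[0][name])
        averaged[name] = [
            sum(w[name][i] * n for w, n in zip(site_weights, site_sizes)) / total
            for i in range(length)
        ]
    return averaged


# Hypothetical two-site round: only these weight vectors leave each
# institution, never the underlying patient images.
site_a = {"layer": [1.0, 2.0]}
site_b = {"layer": [3.0, 6.0]}
global_weights = federated_average([site_a, site_b], site_sizes=[100, 300])
```

In practice this aggregation is repeated over many rounds, which is the communication cost the synthetic-data approach aims to avoid.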

How CATphishing works: Each participating medical center trains its own LDM on local patient data to learn the underlying distribution of their medical images. These trained models are then sent to a central server where they generate synthetic MRI samples that preserve the essential characteristics of the original data. The resulting synthetic dataset combines contributions from all participating institutions, creating a comprehensive training resource for downstream classification tasks.
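The data flow described above can be sketched with a toy stand-in for the generative model. This is only an illustration under strong assumptions: a per-class Gaussian over a single number replaces the latent diffusion model, and every name here (`ToyGenerator`, `train_local_generator`, `build_synthetic_dataset`) is hypothetical rather than taken from the study.

```python
import random
import statistics
from dataclasses import dataclass
from typing import Dict, List, Tuple

Sample = Tuple[float, int]  # (toy 1-D "image" feature, class label)


@dataclass
class ToyGenerator:
    """Stand-in for a site's trained LDM: per-class mean and standard deviation."""
    stats: Dict[int, Tuple[float, float]]

    def sample(self, n_per_class: int, rng: random.Random) -> List[Sample]:
        return [
            (rng.gauss(mean, std), label)
            for label, (mean, std) in sorted(self.stats.items())
            for _ in range(n_per_class)
        ]


def train_local_generator(local_data: List[Sample]) -> ToyGenerator:
    """Runs at each institution; only the fitted generator is returned."""
    by_class: Dict[int, List[float]] = {}
    for value, label in local_data:
        by_class.setdefault(label, []).append(value)
    return ToyGenerator(
        {label: (statistics.mean(v), statistics.pstdev(v)) for label, v in by_class.items()}
    )


def build_synthetic_dataset(
    generators: List[ToyGenerator], n_per_class: int, seed: int = 0
) -> List[Sample]:
    """Runs on the central server: pool synthetic samples from every site's generator."""
    rng = random.Random(seed)
    pooled: List[Sample] = []
    for generator in generators:
        pooled.extend(generator.sample(n_per_class, rng))
    return pooled


# Made-up local datasets for two sites (not data from the study).
site_1 = [(0.9, 0), (1.1, 0), (2.9, 1), (3.1, 1)]
site_2 = [(1.4, 0), (1.6, 0), (3.4, 1), (3.6, 1)]
generators = [train_local_generator(site) for site in (site_1, site_2)]
synthetic = build_synthetic_dataset(generators, n_per_class=5)
```

The downstream classifier would then be trained on `synthetic` alone, which mirrors the "synthetic data only" arm of the comparison.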

Comprehensive Multi-Institutional Validation

The research team conducted extensive validation using retrospective MRI scans from seven different datasets, ensuring robust evaluation across diverse patient populations and imaging protocols. The datasets included:

- Public datasets: The Cancer Genome Atlas (TCGA), Erasmus Glioma Database (EGD), University of California San Francisco Preoperative Diffuse Glioma MRI dataset, and University of Pennsylvania glioblastoma cohort

- Internal datasets: UT Southwestern Medical Center (two distinct cohorts), New York University, and University of Wisconsin-Madison

In total, the study analyzed 2,491 unique patients with preoperative MRI scans across four sequences: T1-weighted, post-contrast T1-weighted, T2-weighted, and T2-weighted fluid-attenuated inversion recovery (FLAIR). The completely independent training and testing cohorts ensured unbiased evaluation of model performance.

Rigorous Quality Assessment of Synthetic Images

The researchers employed multiple quantitative metrics to evaluate the quality and realism of synthetic MRI images generated by the LDMs:

Fréchet Inception Distance (FID) measurements demonstrated that synthetic samples closely matched their real counterparts, with particularly strong performance for the UTSW and EGD datasets. Cross-dataset comparisons consistently showed higher FID scores, meaning a greater distance between distributions, which confirms that each LDM learned the distribution specific to its own institution's data.
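For reference, the standard definition of FID compares Gaussian fits to feature embeddings of the real and synthetic image sets. The symbols below are notation introduced here, not taken from the article: $\mu_r, \Sigma_r$ are the mean and covariance of the real-image features and $\mu_s, \Sigma_s$ those of the synthetic-image features. The article does not state which feature extractor the study used.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_s \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_s - 2\,(\Sigma_r \Sigma_s)^{1/2} \right)
```

The score is zero when the two fitted Gaussians coincide and grows as their means or covariances diverge.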

No-reference image quality assessment used the BRISQUE and PIQE metrics. BRISQUE scores indicated that synthetic images contained less noise and fewer artifacts than real images, though perceptual quality measured by PIQE showed room for improvement in higher-level structural fidelity.

Clinical Performance Validation

The critical test came in comparing classification performance across different training strategies. Researchers evaluated IDH mutation classification and tumor-type classification using models trained through three approaches:

- Centralized training with real shared data

- Federated learning with real data

- CATphishing with synthetic data only

Remarkably, models trained exclusively on synthetic data achieved comparable performance to those trained on real data across key metrics including accuracy, sensitivity, specificity, and area under the curve (AUC) values. This validation was conducted on independent test sets from five institutions, demonstrating the generalizability of the approach.
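The four metrics named above can be computed from a binary classifier's outputs as follows. This is a minimal sketch: the labels and scores are made up for illustration and are not results from the study, the 0.5 decision threshold is an assumption, and `binary_metrics` is a hypothetical helper name.

```python
from typing import Dict, List


def binary_metrics(
    y_true: List[int], y_score: List[float], threshold: float = 0.5
) -> Dict[str, float]:
    """Accuracy, sensitivity, specificity and AUC; assumes both classes are present."""
    y_pred = [1 if score >= threshold else 0 for score in y_score]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    # AUC as the probability that a random positive case is scored above a
    # random negative case, with ties counted as one half.
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)

    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn),  # true positive rate
        "specificity": tn / (tn + fp),  # true negative rate
        "auc": wins / (len(pos) * len(neg)),
    }


# Made-up example: 1 = mutation present, 0 = absent.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
metrics = binary_metrics(labels, scores)
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarizes ranking quality across all thresholds, which is why studies of this kind usually report both.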

Implications for Medical Imaging and Beyond

This research represents a significant advancement in addressing the fundamental tension between data privacy and collaborative AI development in healthcare. The CATphishing framework offers several distinct advantages:

Enhanced privacy protection: By sharing only trained synthetic data generators rather than raw patient data or iteratively updated classifier weights, institutions keep patient images on site and retain control over sensitive information.

Scalability and flexibility: The approach supports diverse medical imaging applications including segmentation, detection, and multi-class classification tasks beyond the demonstrated IDH and tumor-type classification.

Reduced computational and communication overhead: Compared to federated learning’s iterative weight aggregation process, CATphishing requires less frequent communication and can be more computationally efficient.

The success of this synthetic data approach in medical imaging suggests potential applications across other sensitive domains where data privacy concerns have limited AI development, including financial services, defense, and industrial applications where proprietary data must be protected while still enabling collaborative innovation.

Future Directions and Challenges

While the results are promising, the researchers note that further work is needed to improve the perceptual quality of synthetic images and extend the approach to more complex medical imaging tasks. The current framework has demonstrated particular strength in classification tasks, but its applicability to more nuanced diagnostic challenges requires additional validation.

The CATphishing framework represents a paradigm shift in how institutions can collaborate on AI development while maintaining data privacy. As synthetic data generation techniques continue to mature, they may fundamentally transform how we approach sensitive data sharing across healthcare, industry, and research institutions worldwide.
