Parliamentary Committee Warns of Imminent Repeat of 2024 Civil Unrest

The House of Commons Science, Innovation and Technology Committee has issued a stark warning that failure to address online misinformation could trigger a repeat of the 2024 summer riots, with MPs accusing the government of complacency in tackling the growing threat posed by social media platforms and artificial intelligence.

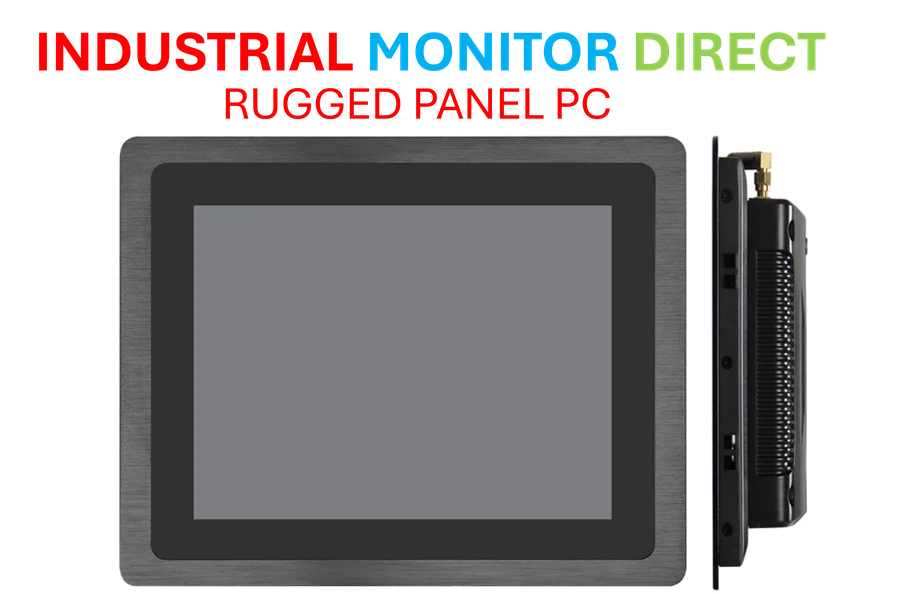


Committee Chair Chi Onwurah expressed deep concern that ministers appear dangerously relaxed about the viral spread of legal but harmful content, stating that public safety is directly at risk. The warning comes amid growing evidence that generative AI tools have dramatically lowered the barrier for creating convincing hateful and deceptive content that can rapidly escalate social tensions.

Government Response Falls Short on Critical Recommendations

The committee’s disappointment stems from the government’s rejection of key proposals in its recent report, "Social Media, Misinformation and Harmful Algorithms." Although the government accepted most of the report’s conclusions, it declined to support legislation covering generative AI platforms or to intervene in the online advertising market, which MPs say incentivizes the creation of harmful content.

According to committee findings, inflammatory AI-generated images circulated widely on social media following the Southport stabbings, demonstrating how quickly advanced technology can exacerbate real-world tragedies. The government maintains that existing Online Safety Act provisions adequately cover AI-generated content, but Ofcom testimony suggests significant gaps remain in regulating AI chatbots and similar technologies.

Digital Advertising Models Under Scrutiny

MPs were particularly concerned about social media advertising systems that allow harmful and misleading content to be monetized. The committee specifically cited websites that spread misinformation about the Southport attacker’s identity as an example of how advertising revenue models reward engagement regardless of whether the content is true.

While the government acknowledged transparency concerns in the online advertising market, it stopped short of creating the recommended regulatory body. Instead, officials pointed to existing industry efforts to increase accountability, particularly regarding illegal advertisements and child protection. This growing concern about digital platform accountability reflects broader global challenges in regulating rapidly evolving technology sectors.

AI Regulation Gap Leaves System Vulnerable

The committee remains unconvinced by government assurances that current legislation sufficiently addresses generative AI risks. Onwurah emphasized that technology is developing at such a rapid pace that additional regulatory measures will clearly be necessary to combat AI’s impact on misinformation spread.

This regulatory challenge comes as rapid developments in artificial intelligence transform how content is created and distributed. The speed of these advances makes it especially difficult for legislators to maintain social stability while still fostering innovation.

Research and Reporting Shortfalls Identified

Further compounding concerns, the government rejected calls for annual reports to Parliament on the state of online misinformation, arguing that such transparency could hinder operational effectiveness. The committee had also recommended more research into how social media algorithms amplify harmful content, but the government deferred to Ofcom’s judgment on research priorities.

Ofcom has acknowledged the need for broader involvement from academia and the research sector in understanding recommendation algorithms, suggesting that current understanding of these systems remains incomplete. Meanwhile, the technology itself continues to advance at a pace that often outstrips regulatory frameworks.

Broader Technological Context

The misinformation challenge sits within a wider landscape of rapid technological change affecting many sectors. As legislators grapple with social media regulation, developments in other fields, such as energy technology, show the same pattern: innovation frequently arrives before a comprehensive regulatory response.

This pattern highlights the fundamental tension between technological progress and social stability that governments worldwide must navigate. The UK’s experience with the 2024 riots and subsequent parliamentary response serves as a cautionary case study in the real-world consequences of digital misinformation.

Path Forward Requires Urgent Action

The committee maintains that without addressing both AI regulation gaps and the advertising business models that incentivize misinformation amplification, future social unrest remains inevitable. Onwurah summarized the situation starkly: “Public safety is at risk, and it is only a matter of time until the misinformation-fuelled 2024 summer riots are repeated.”

As technology continues to evolve, the gap between innovation and regulation appears to be widening rather than narrowing. The coming months will prove crucial in determining whether the government heeds these warnings or maintains its current position despite mounting evidence of systemic risk.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.


Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.