Study Reveals Widespread Inability to Detect Racial Bias in AI Training Data

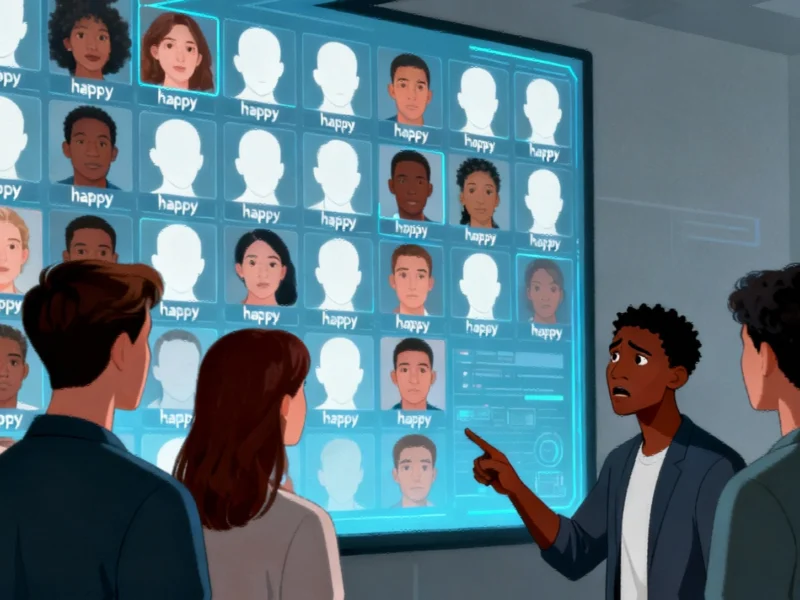

Researchers found that artificial intelligence systems can develop racial bias when trained on unrepresentative data, but most users fail to recognize these imbalances. The study reveals that people typically notice bias only when an AI system's performance is visibly skewed across racial groups, for example when it classifies emotions less accurately for some groups than for others.

AI Systems Learn Racial Bias Through Training Data

According to a recent study published in Media Psychology, most users cannot identify racial bias in artificial intelligence training data, even when the data is clearly presented to them. The research indicates that AI systems trained on imbalanced datasets can develop skewed perceptions, such as classifying white people as happier than individuals from other racial backgrounds.
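
To make the idea of an imbalanced dataset concrete, here is a minimal Python sketch of one way such a skew could be checked. It is not the study's method: the function name `label_share_by_group`, the group names, and the toy data are all hypothetical and chosen only for illustration.

```python
from collections import Counter

def label_share_by_group(samples, label):
    """Return, for each group, the fraction of its samples that carry `label`.

    `samples` is an iterable of (group, label) pairs.
    """
    totals = Counter()
    hits = Counter()
    for group, sample_label in samples:
        totals[group] += 1
        if sample_label == label:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}

# Hypothetical toy data, NOT taken from the study: happy faces are mostly
# from one group and sad faces mostly from the other.
toy_samples = (
    [("white", "happy")] * 8 + [("white", "sad")] * 2
    + [("other", "happy")] * 2 + [("other", "sad")] * 8
)

shares = label_share_by_group(toy_samples, "happy")
for group, share in shares.items():
    print(f"{group}: {share:.0%} of samples labeled happy")
```

In this toy dataset, race and emotion label are tangled together, so a model trained on it could learn to associate one group with happiness rather than learning the facial expression itself. A tabulation like this only surfaces the imbalance; it does not show how a trained system would actually behave.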